
With the rise of digital fundus photography and optical coherence tomography (OCT), retina care has also become increasingly reliant on medical imaging for diagnosis. For many clinicians, OCT has become the default diagnostic imaging test for most retinal diseases, while reading-center grading of color fundus photographs remains the preferred method for monitoring disease activity in large clinical trials. CAD has been investigated for a number of retinal diseases, including age-related macular degeneration (AMD), diabetic retinopathy (DR) and pathologic myopia,3,4 but no commercial retinal CAD systems are yet available for routine clinical use.

Because of an aging population and the global diabetes mellitus epidemic, the prevalence of both AMD5 and DR6 is set to increase in the coming decades, and the workload of retina specialists will grow accordingly. Beyond the ophthalmologist’s clinic, interest is mounting in photographic screening programs for DR in primary care settings. Universal adoption of such programs in the United States would require interpreting an estimated 32 million images annually.7 Improvements in CAD via deep learning may offer a way to meet these new challenges.

Deep Learning in Retinal Image Analysis

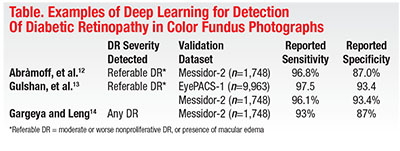

Much of the existing literature on deep learning in computer diagnosis of retinal disease has focused on DR detection in fundus photography. Many authors have applied deep learning to tasks such as segmentation, quality assessment or feature detection, while others have attempted to train AI to detect and classify disease (Table).8

Michael Abràmoff, MD, PhD, and colleagues at the University of Iowa demonstrated in 2016 that adding deep-learning methods to an existing DR detection algorithm greatly improved its performance in detecting referable DR.9 Shortly thereafter, investigators from Google published a report describing a deep-learning algorithm trained on more than 100,000 images to identify referable DR.10 The algorithm was validated on two independent datasets (EyePACS-1, n=9,963; and Messidor-2, n=1,748), using the majority opinion of a panel of ophthalmologists as the reference standard. Impressively, at an operating point selected for high sensitivity, sensitivity was 97.5 percent and 96.1 percent and specificity was 93.4 percent and 93.9 percent for EyePACS-1 and Messidor-2, respectively. In 2017, Rishab Gargeya, a Davidson Fellows Scholar, joined Theodore Leng, MD, MS, at Byers Eye Institute, Stanford University, to demonstrate that automated detection of DR using deep learning can be extended to mild DR, in addition to referable DR.11
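An "operating point" is the score threshold at which an algorithm's continuous output is turned into a referable or non-referable call. The sketch below assumes a model that outputs one score per image and uses made-up toy scores (not data from any cited study) to show how lowering the threshold buys sensitivity at the cost of specificity. The function names `sensitivity_specificity` and `high_sensitivity_threshold` are hypothetical.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Call an image positive when its score >= threshold; labels use 1 = disease."""
    tp = fn = tn = fp = 0
    for score, label in zip(scores, labels):
        predicted_positive = score >= threshold
        if label == 1:
            if predicted_positive:
                tp += 1
            else:
                fn += 1
        else:
            if predicted_positive:
                fp += 1
            else:
                tn += 1
    # Assumes the set contains at least one diseased and one healthy image.
    return tp / (tp + fn), tn / (tn + fp)


def high_sensitivity_threshold(scores, labels, target_sensitivity):
    """Highest candidate threshold whose sensitivity meets the target.

    Sensitivity can only rise (and specificity only fall) as the threshold
    drops, so the first threshold that meets the target, scanning from high
    to low, keeps specificity as high as possible.
    """
    for threshold in sorted(set(scores), reverse=True):
        sensitivity, _ = sensitivity_specificity(scores, labels, threshold)
        if sensitivity >= target_sensitivity:
            return threshold
    return min(scores)


# Hypothetical toy scores for 10 images (1 = referable disease, 0 = not referable).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.35, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

for target in (0.8, 0.95):
    t = high_sensitivity_threshold(scores, labels, target)
    sens, spec = sensitivity_specificity(scores, labels, t)
    print(f"target {target}: threshold {t}, sensitivity {sens:.2f}, specificity {spec:.2f}")
```

With these toy numbers, requiring 95 percent sensitivity pushes the threshold down from 0.6 to 0.35, raising sensitivity from 0.80 to 1.00 while specificity falls from 0.80 to 0.60.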

Given promising results in color fundus photography, extending deep-learning techniques to OCT analysis is a reasonable next step. Some efforts have been undertaken to this end, with applications to both segmentation and disease diagnosis.12–15 For example, Cecilia Lee, MD, MS, and colleagues at the University of Washington, Seattle, have demonstrated that deep learning can effectively classify OCT images as normal or AMD.

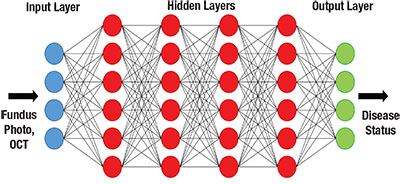

Figure. Simplified schematic of a deep neural network.

Notably, the images, clinical data and notes that the study authors used to determine AMD status were extracted automatically from an electronic medical record (EMR) database. This illustrates another key contributor to the growth of deep learning: the ever-increasing availability of datasets large enough to train neural networks.

Man vs. Machine: Closing the Gap

The field of artificial intelligence (AI) was born in the summer of 1956 at Dartmouth College, based on the idea that machines could be made to simulate human intelligence. Substantial progress in the field over the decades has naturally given rise to a key question: Is it possible to develop AI that can equal or surpass the capabilities of humans?

For the ancient Chinese game of Go, this goal was long considered out of reach. Despite its simple rules, Go has been described by many as the most complex classical strategy game in history. Indeed, even after the successes of AI in chess, including the famous 1997 defeat of Grandmaster Garry Kasparov by IBM’s Deep Blue, Go engines struggled to defeat even moderately skilled players, let alone professionals.17

However, this changed in unprecedented fashion in 2016, when Google DeepMind’s AlphaGo18 defeated Lee Sedol, one of the world’s best Go players, in a highly publicized five-game series. One of the key factors in this groundbreaking achievement was the use of a technique known as deep learning.19

A Glimpse Ahead

Considerable work remains to be done to further validate, optimize and generalize these algorithms, but the overall trend of the data available thus far is promising. Although it is still somewhat premature, it is interesting to consider the possibilities and consequences that widespread deployment of deep-learning algorithms in retina care could bring.

In the clinic, assisted or automated interpretation of images could improve the efficiency of image reading and allow physicians more direct face-time with patients. Beyond the clinic, the low cost and high processing capacity of these systems may open doors in areas such as telemedicine, disease screening or monitoring, and even research. In the latter case, for example, deep-learning algorithms could improve objectivity and reproducibility in both prospective and retrospective studies by serving as a universal standard for image grading in situations where centralized reading-center grading is not feasible or accessible.

Yet deep learning in medicine is not without its own unique challenges. For example, the scale and complexity of deep-learning algorithms make them a “black box”: while physicians interpret images based on sets of defined features and their relationship with disease, neither physicians nor programmers can know exactly how the algorithms reach their final conclusions. Although this would hardly be the first instance of the medical community adopting a tool whose mechanism is poorly understood, some degree of apprehension toward integrating deep learning is likely inevitable, given its computerized nature.

At the same time, developers of these algorithms are not completely blind to the inner workings of the “black box.” Some groups have generated heat maps that highlight the regions of each image that most influenced the algorithm’s decision, providing a much-needed link between man and machine.11,15 Another potential confounding issue is automation bias: the tendency of individuals to agree with the computer’s decision, for better or for worse. It seems reasonable to suspect that this effect would become more pronounced as automation reaches parity with (or perhaps even superiority over) humans, but additional research on this phenomenon is needed.16
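One common, model-agnostic way to build such a heat map is occlusion testing: mask one region of the image at a time and record how much the model's output drops. The sketch below assumes a grayscale image stored as a 2-D list and a model that returns a single disease score; `occlusion_map` and `toy_model` are hypothetical names, and the cited studies may have used other visualization methods.

```python
def occlusion_map(image, model, patch=2, baseline=0.0):
    """Score drop when each patch x patch block is replaced by `baseline`.

    `image` is a 2-D list of pixel intensities and `model` is any function
    mapping such an image to a disease score. Larger values mark regions the
    score depends on most.
    """
    height, width = len(image), len(image[0])
    base_score = model(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            occluded = [row[:] for row in image]  # copy so the original stays intact
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline
            drop = base_score - model(occluded)
            # Every pixel in the masked block gets the same importance value.
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


def toy_model(image):
    """Hypothetical stand-in classifier that only looks at the lower-right 2 x 2 block."""
    return sum(image[r][c] for r in (2, 3) for c in (2, 3))


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(image, toy_model, patch=2)
for row in heat:
    print(row)
```

Because the toy model depends only on the lower-right block, only that block lights up. With a real network, the map would be overlaid on the fundus photograph or OCT scan so a clinician can check whether the algorithm attended to plausible lesions.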

Ultimately, although many challenges remain, AI appears to be here to stay. The big question now is how the healthcare system as a whole will adapt to the ever-increasing incorporation of this technology. If the transition to EMRs is any indication, AI integration may well shift from a luxury to a necessity. Public trust in the healthcare system is likely to be a crucial factor in this process, and accordingly, physicians and other healthcare providers should prepare to take the lead confidently in facilitating these changes.

What is Deep Learning?

Deep learning employs architectures known as artificial neural networks (ANNs), which are systems of interconnected units (“neurons”) modeled after the neuronal connections in the brain.

By adjusting the strengths (weights) of these connections based on existing data, the ANN can be “trained” to perform a specific task, such as image recognition. Deep neural networks (hence “deep” learning) contain multiple intermediate (hidden) layers between the input and output layers; these additional layers enable hierarchical feature abstraction, for example, progressing from pixels to lines to shapes and so on.
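The idea of training by adjusting connection weights can be made concrete with a deliberately tiny sketch. It assumes pure Python, sigmoid units, a mean-squared-error loss, plain full-batch gradient descent with backpropagation and the toy XOR task; the names `init_network`, `forward`, `loss` and `train_step` are hypothetical, and the retinal systems discussed above use far larger networks trained on images.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(layer_sizes, seed=0):
    """Random weights between each pair of adjacent layers, e.g. [2, 4, 4, 1]."""
    rng = random.Random(seed)
    network = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        network.append((weights, [0.0] * n_out))
    return network


def forward(network, x):
    """Activations of every layer: input first, each hidden layer, output last."""
    activations = [list(x)]
    for weights, biases in network:
        prev = activations[-1]
        activations.append([sigmoid(sum(w * a for w, a in zip(row, prev)) + b)
                            for row, b in zip(weights, biases)])
    return activations


def loss(network, data):
    """Mean squared error of the single output unit over the dataset."""
    return sum((forward(network, x)[-1][0] - y) ** 2 for x, y in data) / len(data)


def train_step(network, data, lr=0.5):
    """One full-batch gradient-descent update of all weights via backpropagation."""
    grads = [([[0.0] * len(row) for row in w], [0.0] * len(b)) for w, b in network]
    for x, y in data:
        acts = forward(network, x)
        out = acts[-1][0]
        # Error signal at the output unit: derivative of MSE through the sigmoid.
        delta = [2.0 * (out - y) * out * (1.0 - out) / len(data)]
        for layer in range(len(network) - 1, -1, -1):
            weights, _ = network[layer]
            prev = acts[layer]
            gw, gb = grads[layer]
            for j, d in enumerate(delta):
                gb[j] += d
                for i, a in enumerate(prev):
                    gw[j][i] += d * a
            # Propagate the error signal back to the previous layer's units.
            delta = [sum(weights[j][i] * delta[j] for j in range(len(delta))) * a * (1.0 - a)
                     for i, a in enumerate(prev)]
    for (weights, biases), (gw, gb) in zip(network, grads):
        for j in range(len(weights)):
            biases[j] -= lr * gb[j]
            for i in range(len(weights[j])):
                weights[j][i] -= lr * gw[j][i]


XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
net = init_network([2, 4, 4, 1], seed=0)  # input, two hidden layers, output
loss_before = loss(net, XOR_DATA)
for _ in range(2000):
    train_step(net, XOR_DATA, lr=0.5)
loss_after = loss(net, XOR_DATA)
print(f"MSE before training: {loss_before:.3f}, after: {loss_after:.3f}")
```

XOR is used because no single-layer network can solve it, so the hidden layers are doing real work; the exact final error depends on the random starting weights.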

Historically, training deep neural networks was considered to be impractical, but advances in methodology and hardware have spurred a re-emergence of interest in these techniques and brought about exciting advances in many disciplines.

Take-home Point

Artificial intelligence (AI) has made significant inroads in medicine, particularly with the advent of deep learning. In retina, this has led to advances in automated analysis of digital fundus photography and optical coherence tomography. This article explores the state of deep learning in the diagnosis of retinal disease, some of the challenges facing broader adoption of AI in the field and the possibilities and consequences of deep learning in retina care. RS

REFERENCES

1. Willems JL, Abreu-Lima C, Arnaud P, et al. The diagnostic performance of computer programs for the interpretation of electrocardiograms. N Engl J Med. 1991;325:1767-1773.

2. van Ginneken B, Schaefer-Prokop CM, Prokop M. Computer-aided diagnosis: How to move from the laboratory to the clinic. Radiology. 2011;261:719-732.

3. Mookiah MRK, Acharya UR, Chua CK, Lim CM, Ng EYK, Laude A. Computer-aided diagnosis of diabetic retinopathy: A review. Comput Biol Med. 2013;43:2136-2155.

4. Zhang Z, Srivastava R, Liu H, et al. A survey on computer aided diagnosis for ocular diseases. BMC Med Inform Decis Mak. 2014;14:80.

5. Wong WL, Su X, Li X, et al. Global prevalence of age-related macular degeneration and disease burden projection for 2020 and 2040: A systematic review and meta-analysis. Lancet Glob Health. 2014;2:e106-116.

6. Saaddine JB, Honeycutt AA, Narayan KMV, Zhang X, Klein R, Boyle JP. Projection of diabetic retinopathy and other major eye diseases among people with diabetes mellitus: United States, 2005-2050. Arch Ophthalmol. 2008;126:1740-1747.

7. Abràmoff MD, Niemeijer M, Russell SR. Automated detection of diabetic retinopathy: Barriers to translation into clinical practice. Expert Rev Med Devices. 2010;7:287-296.

8. Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60-88.

9. Abràmoff MD, Lou Y, Erginay A, et al. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Invest Ophthalmol Vis Sci. 2016;57:5200-5206.

10. Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402-2410.

11. Gargeya R, Leng T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology. 2017;124:962-969.

12. Fang L, Cunefare D, Wang C, Guymer RH, Li S, Farsiu S. Automatic segmentation of nine retinal layer boundaries in OCT images of non-exudative AMD patients using deep learning and graph search. Biomed Opt Express. 2017;8:2732-2744.

13. Lee CS, Tyring AJ, Deruyter NP, Wu Y, Rokem A, Lee AY. Deep-learning based, automated segmentation of macular edema in optical coherence tomography. Biomed Opt Express. 2017;8:3440-3448.

14. El Tanboly A, Ismail M, Shalaby A, et al. A computer-aided diagnostic system for detecting diabetic retinopathy in optical coherence tomography images. Med Phys. 2017;44:914-923.

15. Lee CS, Baughman DM, Lee AY. Deep learning is effective for classifying normal versus age-related macular degeneration OCT images. Ophthalmol Retina. 2017;1:322-327.

16. Goddard K, Roudsari A, Wyatt JC. Automation bias: A systematic review of frequency, effect mediators, and mitigators. J Am Med Inform Assoc. 2012;19:121-127.

17. Müller M. Computer Go. Artif Intell. 2002;134:145-179.

18. Silver D, Huang A, Maddison CJ, et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016;529:484-489.

19. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436-444.